At 1:30 a.m. I hopped out of bed, got dressed, and headed out for my 2:00 a.m. date. I had set the alarm and tried to sleep, but I could only toss around in bed, rehearsing the adventure to come. The night was bitter cold, but my old Ford started on the first try. New snow sparkled under my lights as the tires crunched their way through the empty streets, but I paid no attention.

My mind was racing ahead to my rendezvous. Soon I was at my lady’s front door, knocking the snow off my shoes and letting myself in. Yes, I knew her intimately enough to have my own key. And I found her wide awake as usual, winking her familiar come-hither wink. I took a quick glance at her console, then mounted my tape on an empty drive.

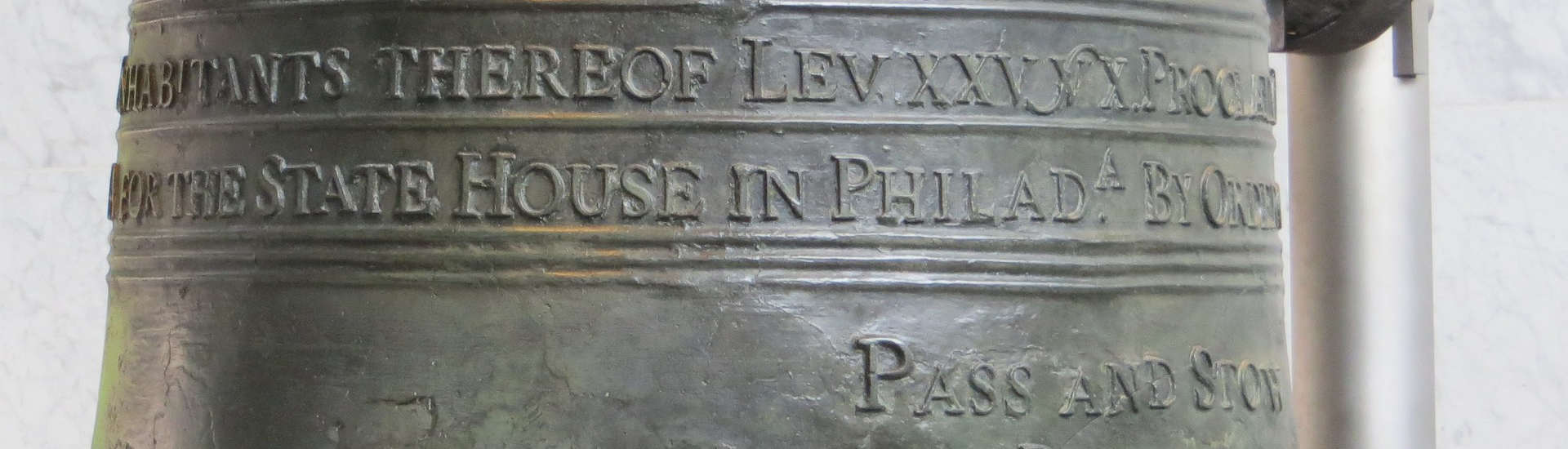

You see, the lady in question was a Univac 1107 Thin-Film Memory Computer – always a lady to me, never just a machine. Her “house” was the Jennings Computer Center on the campus of the Case Institute of Technology. It was 1966, and I was a young man in love, in love with Rosabeth, as I called her, though I never mentioned that name to anyone.

Was “love” too strong a word? Perhaps, but I was enthralled by the new technology. Computers in those days were all mainframes, ensconced behind glass walls. Anyplace else, a lowly user like me had to submit an input card deck at a window like a bank teller’s, wait till the next day, and pick up the resulting printout at another window. Case was one of the rare educational institutions at that time, perhaps the only one, that practiced an “open shop” policy. This meant that students could actually touch the computer – not just touch her but, in carefully prescribed ways, actually operate her.

This was not without risk. My Rosabeth was a multimillion-dollar machine, and college students are what they are. Yet in the category of things that could be blamed on students, I recall nothing more serious than occasional jams in the card reader. The problems that I most remember were the lady’s frequent fainting spells, some of them lasting many hours. Although computers had changed from vacuum tubes to transistors some years earlier, thereby advancing significantly in reliability, they were still rather delicate creatures, prone to almost daily outages that, for all we students knew, might have been fits of the vapors.

I was lucky this winter night because the lady was wide awake and ready to go. I had reserved time from 2:00-4:00 a.m. because only at such times could a graduate student like me get the time needed to pursue his thesis work. Like all graduate students then and now, I felt it entirely natural to be up and about at such hours.

The 1107 worked strictly in what is called “batch mode.” This meant that users prepared an input card deck and fed it into a card reader, a machine that sensed the holes in the cards and converted them into data that were recorded in a temporary staging area on a magnetic storage device. When your job’s turn came, the console teletype machine rattled off an announcement of the start of your job; then you held your breath. If you heard it rattling again after a couple of seconds, announcing your job’s completion, you knew there had been an error. If it kept silent for a decent amount of time, you could be pretty sure your job was running properly. At least it was doing something, though there was no guarantee of good results. Some time later, a half-ton line printer would bang out your results on 11½-by-14-inch fanfold paper, which you paged through to find the good or bad news.

As I type this article, I go back and forth constantly, revising and fixing, revising and fixing. But imagine, if you will, using a computer to write an article in batch mode. Not that anybody ever did that. Word processors hadn’t been invented yet, and if they had, they would have been a frightful waste of a multimillion dollar machine. But writing our programs was somewhat like writing an article. We wrote them with a “high level” language called Algol that used a lot of English words and had rudimentary but strict rules of syntax and semantics. As students, we bought boxes of 2,000 cards for $2 each and sat down at a keypunch machine, if one was available. These were ugly, noisy beasts that fed blank cards into a punching station. Every time you hit a character on the keyboard, it would punch a column of holes, then advance the card by one column. There was no eraser, no un-punching holes. If you made a mistake you had to copy your bad card onto a fresh one, up to the point of the mistake, and then continue typing. A lot of spoiled cards and a lot of fanfold paper went into the dumpster in those pre-recycling days.

Of course, you didn’t really know if you had made a mistake until you tried to run your program. Any mistake – typo, syntax error, whatever – meant your job would fail and you would have to go find a keypunch, fix your mistake, get in line again, and have another go. Only later did I learn how much worse things were everywhere else, where under closed-shop rules users got maybe one or two turnarounds per day. The worst was Lockheed, Georgia, where I once visited. The keypunch operators belonged to a union. You were supposed to write what you wanted on paper forms and submit them to the operators, who in the fullness of time might get around to punching your job, perhaps accurately. Soon the local engineers showed me a hidden keypunch where we could get our work done in spite of the union.

The computer I’m using to write this article is a rather unremarkable desktop PC: dual AMD-64 processor, two gigabytes of memory, a few hundred gigs of disk space. In 1966, bytes hadn’t been invented, but it’s possible to estimate that my desktop machine has roughly four thousand times as much main memory as the 1107 and is a thousand times faster. Its Linux operating system is much more sophisticated than the 1107’s batch system, and the free OpenOffice suite I use would have been unimaginable back then. As to nonvolatile storage, I have several hundred gigabytes on disks, while the 1107 had no disks at all, only a magnetic drum that was to disks as Edison’s cylinders were to phonograph records. I’m sure the capacity of that drum was well under a megabyte.

But wait – do you have any idea of how a computer disk works? Take one apart some time and study it. Then go to howstuffworks.com. In its precise tolerances, fast response, high storage density, long life, and low cost, the disk is an engineering triumph. You live in an age of miracles! So show some gratitude!

Forgive me. I get passionate at times. Back to my story.

The 1107’s operating system had the entire drum to itself, so it wasn’t available for user data. But faculty and grad students could have their own magnetic tapes for storing programs and data. A reel of magnetic tape could hold as much as several dozen boxes of cards, but it was tape, which meant sequential access. If you wanted a file near the end of the tape you had to wait while the drive wound it forward to the proper spot. It usually did this without breaking or tangling your tape. Usually. Oh, you want to replace the fourth file on a tape? No problem: get a fresh tape, copy the first three files from the old tape, write the new file, skip ahead one file on the old tape, then copy the rest. You have to punch all the commands on cards, of course.
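
For readers who have never handled tape, that file-replacement ritual can be sketched in a few lines of modern Python. This is only an illustration, not anything that ran on the 1107: the function name `replace_file_on_tape` and the file names are made up, a tape is modeled as a plain list of files, and a forward-only iterator stands in for sequential access.

```python
def replace_file_on_tape(old_tape, position, new_file):
    """Build a fresh tape with the file at `position` (1-based) replaced.

    Hypothetical model: a tape is a list of files, and the old tape can
    only be read forward, one file at a time.
    """
    if not 1 <= position <= len(old_tape):
        raise ValueError("no such file on the old tape")

    reader = iter(old_tape)  # sequential access: forward only, no seeking
    new_tape = []

    # Copy the files that come before the one being replaced.
    for _ in range(position - 1):
        new_tape.append(next(reader))

    # Write the new file onto the fresh tape.
    new_tape.append(new_file)

    # Skip ahead one file on the old tape, then copy the rest.
    next(reader)
    new_tape.extend(reader)
    return new_tape


old_tape = ["mesh", "loads", "solver", "results-v1", "notes"]
new_tape = replace_file_on_tape(old_tape, 4, "results-v2")
```

The old tape is left untouched, which is why the job needed a fresh reel: there was no way to overwrite one file in the middle without disturbing whatever followed it.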

Night sessions were stressful, not because the hours were late but because the stakes were high. My job, if it ran successfully, would take most of two hours to complete. But if I heard the dreaded clatter that signaled failure, the pressure was on. I would scan my printout frantically, looking for the error, while Rosabeth sat there winking. And if I had my wits about me, I would take another few moments to review the whole program, because the machine always stopped at the first error, leaving subsequent errors to be caught on the next run.

Those nights have faded into a dim but very fond memory. Hobbled as we were by operating conditions unimaginable to the engineering students I now teach, ours were happy times – frustrating, exhilarating, and like all of life at its best, lived to the fullest.

For me, the excitement wasn’t just using the computer but seeing the methods we were developing to solve engineering problems. I was never what would later be called a “computer scientist,” only a user. I had entered Case as a civil engineering major but switched my emphasis when I had the great good fortune to work under the tutelage of Prof. Lucien Schmit, one of the pioneers of something called the “finite element method.” This is now a standard part of the engineering repertoire, and I presently teach it to undergraduates at Santa Clara University. But in those days it was a new way of performing structural analysis – computing the deformation of an airplane wing, for example, in response to various loads. At the heart of the method is a lot of matrix algebra that can only be done with a computer. Finite element analysis was a huge advance over previous methods, which required laborious hours with a slide rule, yet produced only rough answers.

Fast forward to 1983. It’s a sunny spring day outside a small building in Palo Alto, where a software firm had gone out of business. I’m trying to figure how best to load our new printer onto a rented truck. It’s a spiffy, barely used, late-model Printronix that can print lower-case as well as upper-case characters and even produce rudimentary graphics. It’s nowhere near as heavy as the monsters I grew up with back at Case, but I’m very concerned about loading it carefully. I’ve just written a $4,000 check to pay for it, keenly aware of how long it took to save that much money and how fast money goes when you’re starting a new company. Our new three-man engineering consulting firm needed a computer, and with personal computers barely out of the hobbyist stage, our only practical choice was a minicomputer.

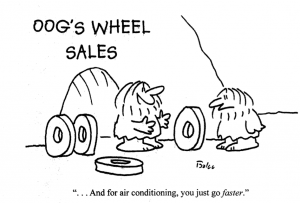

The minicomputer revolution began in the late 1960s, when an innovator by the name of Ken Olsen began building computers that were small enough and cheap enough to be affordable by an engineering division in a large corporation. But at that time computers were the jealously guarded domain of corporate headquarters, and in order to get to his customers in engineering, Olsen decided to avoid the word “computer,” instead calling his machines “Programmable Data Processors.” The bean-counters were none the wiser, and the PDP line of minicomputers – designed, built and sold by Olsen’s Digital Equipment Corporation – began its wildly successful run.

Our PDP-11/34 required only two four-foot-high racks. We splurged, investing in extra memory to get a total of 256k (yes, 256 kilobytes). The machine was certainly a “mini” in comparison to the mainframes; still, it ran on 220-volt power and required special air conditioning. Ah, but it was all ours, and it felt great to sit at our desks and do our work through our very own individual remote video terminals, which could display a full 24 lines of 80 characters each! The advanced time-sharing operating system, RSX-11M, made it seem to each user that he had complete control of the machine. No more card decks!

Some of our work involved finite element analysis, which by then had found its way into commercial software. But that software still required a mainframe, so we got a modem to connect our PDP-11 to distant commercial data centers that made their facilities available on per-minute charges. (We actually owned the modem. Back in the ’70s you could only lease them from Ma Bell, at about $100 per month for a 4k modem.) Since even a medium-size finite element analysis could consume an hour of mainframe time, we had to be very careful not to waste any of those expensive minutes.

Later we got our very own VAX-11, Digital’s successor to the PDP-11 line. It was a leap ahead to 32-bit addressing and virtual memory, and having a VAX quickly became de rigueur in engineering circles. Although all we could afford was a stripped-down VAX-11/750, we were at last able to run small finite element analyses in-house: no more commercial usage charges.

A memory that has stuck with me from the 1970s is a disk drive that was part of a VAX installation at a large institution. It was the size of a washing machine, cost about $30,000, and required monthly maintenance. It held roughly 30 megabytes. Today, for almost no money, you can buy a thumb drive at the drug store that holds 200 times as much. No wonder I sometimes get my kilos, megas, and gigas mixed up. And terabytes (a million million bytes) are coming, followed by petabytes.

The mainframes we had accessed (and occasionally still did, at customer facilities) were not made by Univac or even IBM, but rather by the upstart Control Data Corporation. A genius called Seymour Cray had developed a line of scientific computers for CDC that were geared for mathematical computations rather than business data processing. They left the mighty IBM in the dust. Thomas Watson, Jr., president of IBM, once wondered out loud how it was that Cray, with a team of just 32 people, “including the janitor,” was able to flummox IBM’s team of hundreds of engineers that had designed its ill-starred Stretch computer. Cray harrumphed in reply, “Mr. Watson has answered his own question.”

Seymour Cray left CDC and founded Cray Research in his hometown of Chippewa Falls, Wisconsin. Control Data never really recovered, as its founder William Norris began putting altruism ahead of profit-seeking. Norris built plants in inner-city neighborhoods and poured millions into a doomed information retrieval system called PLATO, meant for use in schools. Meanwhile Cray turned out ever-faster supercomputers from his modest digs in Chippewa Falls. I was privileged to use some of Cray’s machines, but always in batch mode at large government facilities.

Jump ahead to 1989. We make a short trip to a nearby startup called Silicon Graphics for a look at one of their new graphics workstations. Silicon Graphics was following closely behind Sun Microsystems, which had pioneered Unix workstations. Graphic display can require intense computation, and SGI’s innovation was to embed much of that processing in hardware rather than burdening the CPU with all the graphics software. These machines were ideally suited for finite element analysis because of the visual nature of that work, and we were hooked. We got one for a mere $40,000. It hadn’t felt this good since Rosabeth!

SGI raced ahead with bigger and faster machines, and its stock soared. I recall visiting SGI headquarters around 1992, when the company was flying high – fancy digs, free food for employees, and plenty of hubris. I should have known it was riding for a fall. SGI would be outflanked by the likes of NVidia and ATI, outfits that put graphics hardware on relatively cheap cards that would fit on a PC bus. Now Google occupies some of the old SGI buildings and offers its employees free food. I suspect that Google management knows that story well and is determined not to let hubris lead them down the same slope that finished SGI.

I’m going to spare you any tales from the personal computer and internet revolutions, because I have nothing special to tell. We got Macs and PCs pretty much when everybody else did, and we got on the internet pretty much when everybody else did. But the computer revolution can reasonably be said to have started with the ENIAC in 1948. Having gotten on board in 1962, I was a firsthand witness to, and in a small way a participant in, most of that revolution. What a great privilege! It’s as if a professor of English had been alive from Chaucer through Rand. As I look back over it all, I see five themes, all of which have a distinctly libertarian flavor.

First theme: innovators are usually rebels. They are often just as good at devising ways to get around bureaucracies as they are at devising technical breakthroughs. One of my heroes is the late Admiral Grace Hopper. I never met her and never used her COBOL programming language, yet she’s still a hero to me. She made many contributions to computer science and led the way for women in industry and in the Navy, but what I value most about her is her attitude. “It’s easier to ask forgiveness,” she said, “than to ask permission.” If you have an idea, just do it, and present the bosses with the finished results. If it works, what can they say? If not, you just forget to mention it. Google seems to understand this, since it allows its engineers to work one day a week on a project of their choice. One wonders how Google engineers might manage to rebel against such permissiveness.

Seymour Cray was poured from a similar mold. To the end (he died tragically, in a car accident), and even when he was heading Cray Research, Seymour personally designed and tested circuits for his computers. He hated bureaucracy and tried to suppress it in his company. The founders of Intel left the stifling bureaucracy of Fairchild Semiconductor to pursue development of integrated circuits. Steve Jobs and Steve Wozniak at Apple were rebels, as was Bill Gates in his early years. Back before 1960, some rebel at Case started the open-shop policy, probably without asking permission.

Second theme: the computer industry embodies economist Joseph Schumpeter’s famous “creative destruction” concept – the notion that in free markets those who fail are swept away or become fertilizer for those who follow with better ideas. Failed firms must not be propped up. (Will someone please clue Obama in?)

Remington-Rand’s UNIVAC (an acronym for UNIVersal Automatic Computer) had gained nearly complete domination of the computer business by the time it gained fame in the 1952 presidential election. A young Walter Cronkite broadcast the returns on CBS television, aided by a UNIVAC I (an ancestor of Rosabeth) set up in the studio and programmed to predict the result from early returns. The miracle is not so much that the machine called it right – which, that year, wasn’t hard to do – but that it didn’t shut down with a vacuum tube failure, as it typically did every 20 minutes or so. The public had no concept of computers at that time, and the newspapers began to call them “electronic brains.” UNIVAC’s dominance was so complete that its trade name almost became a generic substitute for “computer,” in the way in which Aspirin and Kleenex went from trade names to generics.

Remington-Rand had wiped out a competitor, Eckert-Mauchly, which was the first significant commercial computer company. Its two founders had the right technical background but were clueless as managers. Then IBM, whose Thomas Watson had not long before estimated the world-wide computer market at about half a dozen, woke up and leaped ahead of Remington-Rand. The latter firm, following a merger with Burroughs, lingered on as Unisys, which still exists, barely.

Honeywell, General Electric, and RCA got nowhere in competition with IBM; and though Control Data took the scientific market away, the dominance of IBM in business, where the real money was, became nearly complete by the 1970s. IBM was so prominent that it was attacked with expensive antitrust litigation. But Digital Equipment put its high-end minicomputers up against IBM mainframes, and by 1993 IBM was reeling from competition from DEC and others. It managed to pull out of its slump, but although it still makes mainframes, it has given up on personal computers, laptops, disk drives, and printers.

By 1980, Digital had all the ingredients for the first personal computer but missed that boat when CEO Olsen wondered why anyone would ever want a computer at home. The remains of DEC were bought by Compaq, which was in turn swallowed by Hewlett-Packard. Cray Research faded soon after Seymour’s death and was sold to SGI. SGI went bankrupt. Apple rose and fell and rose again. Microsoft surged, then stalled. Sun is being bought by Oracle. Google is flying high. Stay tuned. But don’t ask to isolate anyone from competition, which is the road to progress.

Third theme: competition doesn’t preclude cooperation, or learning from others. The term “software” didn’t exist in Rosabeth’s day. Each manufacturer supplied its own operating system software with its mainframes, and users wrote whatever application programs they needed: the idea of selling software hadn’t yet come up. Standardizing software across different vendors’ machines was not a priority, just as standard time wasn’t a priority across different towns in the Old West, before railroads.

But by 1962 standard programming languages were beginning to take hold. Writing programs that computers can interpret directly was and is a very tedious and error-prone undertaking. Computer pioneers had gotten the inspiration for a compiler, which is a computer program that translates user programs from a high-level language into a form the computer can run. Two languages were prominent in those days: ALGOL, which ran on UNIVAC and Burroughs machines, and Fortran (FORmula TRANslation), which ran on IBM computers. Grace Hopper’s COBOL came later. Fortran was terse and ugly; COBOL (Common Business-Oriented Language) was verbose and ill-suited to scientific computations. ALGOL was a good compromise; sadly, it lost out to the other two. Yet all of them were swept aside by C, the language in which virtually all modern applications, such as Word and Excel, are written.

In the early days, there was no proprietary application software such as word processors or office suites. But by the 1970s there were software collections written in Fortran that passed from hand to hand and were used by many engineers and scientists in their own programs. One I remember was LINPACK, for matrix algebra, and another was EISPACK, for eigenvalue analysis. You could get these collections by mail, as card decks or magnetic tapes. Al Gore hadn’t yet invented the internet. The people who were in the field found ways to cooperate and exchange.

Fourth theme: obstacles can be fun; hurdles can be opportunities. By the time I came upon the scene, running your application had become a two-step process. First the compiler read your ALGOL program from the first part of your card deck. If there were syntax errors, your job ended then and there; but if it compiled, the computer ran your translated program, which would usually read data from the second part of your card deck. If there were errors during this second stage, such as attempting to divide by zero, your job would end at that point. Otherwise you would get a printout of whatever results you called for in your program. Those results, of course, could be right or wrong. You presumably knew what you wanted, but the computer did exactly what you told it, which could be something quite different.
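
The two-step flow of such a job can be mimicked, loosely, with Python’s own built-in `compile` and `exec`. This is a toy sketch rather than a picture of the 1107’s software: Python stands in for ALGOL, and the function name `run_batch_job` and the `data` and `printout` variables are invented for the illustration.

```python
def run_batch_job(program_cards, data_cards):
    """Toy model of a two-stage batch job: compile first, then run.

    Returns (status, printout). All names here are hypothetical.
    """
    source = "\n".join(program_cards)

    # Stage 1: compile. A syntax error ends the job then and there.
    try:
        code = compile(source, "<card deck>", "exec")
    except SyntaxError:
        return "compile error", []

    # Stage 2: run the translated program against the data cards.
    printout = []
    environment = {"data": list(data_cards), "printout": printout}
    try:
        exec(code, environment)
    except Exception:
        # A run-time failure such as division by zero ends the job here.
        return "run error", printout

    # The job finished, but nothing guarantees the results are right.
    return "completed", printout


good = run_batch_job(["total = sum(data)", "printout.append(total)"], [1, 2, 3])
typo = run_batch_job(["total = sum(data"], [1, 2, 3])
crash = run_batch_job(["printout.append(data[0] / data[1])"], [1, 0])
```

Here `good` completes with its total, `typo` never gets past the compiler, and `crash` compiles cleanly but dies in the second stage. A fourth job that ran to completion and printed the wrong answer would look exactly like `good`.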

The main difficulty in writing Algol or Fortran programs was the limited memory available. If you were using matrices (arrays of numbers), as we did in finite element analysis, you could run out of memory very quickly. So we had to work hard to use memory judiciously. In fact, the vast majority of our effort was directed not to the engineering algorithm itself (the recipe) but to getting past the limitations and getting the darn machine to do what we wanted.

Well, did we feel deprived? Certainly not, because we were on the cutting edge, like the first automobile enthusiasts who spent most of their time and money getting their machines to start and run. Most of us weren’t much bothered by the hurdles we faced in getting our programs to run.

But I’m actually a bit sorry about that. Hurdles are opportunities for entrepreneurs to find ways of easing such burdens – while, if they’re lucky, making themselves rich. I now see the whopping big opportunity that was staring me in the face in the 1970s: to convert LINPACK and EISPACK into a commercial package that relieved users of most of the burden of writing Fortran programs. I was oblivious to that opportunity – but Cleve Moler wasn’t.

Moler is a brilliant, innovative mathematician, specializing in numerical analysis. He was one of the authors of LINPACK and EISPACK. He and two colleagues started the MathWorks in 1984, offering a commercial software package called MATLAB which enabled users to use the capabilities of LINPACK and EISPACK without writing Fortran programs. Over the years MATLAB has grown enormously in power and flexibility. It now has its own programming language and a vast array of specialized applications, many written by users and shared freely. Like Bill Gates, though on a much smaller scale, Cleve and company have made thousands of mathematicians, engineers, and scientists enormously more productive, while (very likely) getting rich and having a lot of fun. The MathWorks remains privately owned, so its financials aren’t available, but it now employs 2,200 people.

Fifth theme: free enterprise brings power – the power of the consumer. I have MATLAB open on my desktop as I write. The power it gives me exceeds the wildest dreams of anyone in Rosabeth’s day. Last year I helped persuade the Santa Clara engineering school to offer a course in MATLAB programming to civil and mechanical engineers rather than teaching them C programming. I’m now preparing to teach that course for the second time. Whenever I teach, I try to convey some of the awe I feel at the power available to us. I suppose that’s a little like listening to my grandfather telling of the days before telephones. You listen politely, and turn back to your computer. Its power is yours – but it is a gift of free enterprise.